[Crossposted from the Layar blog.]

At the end of his otherwise lovely keynote at ARE2011, Microsoft’s Blaise Aguera y Arcas proposes a distinction between “strong AR” and “weak AR”. Aguera’s obviously a very talented technologist, but in my opinion he’s done the AR industry a disservice by framing his argument in a narrow, divisive way:

“I’ll leave you with just one or two more thoughts. One is that, consider, there’s been a lot of so called augmented reality on mobile devices over the…past couple of years, but most of it really sucks. And most of it is what I would call weak augmented reality, meaning it’s based on the compass and the GPS and some vague sense of how stuff out there in the world might relate to your device, based on those rather crude sensors. Strong AR is when you, when some little gremlin is actually looking through the viewfinder at what you’re seeing, and it’s saying ah yeah that’s, this is that, that’s that and that’s the other and everything is stable and visual, that’s strong AR. Of course the technical requirements are so much greater than just using the compass and the GPS, but the potential is so much greater as well.”

Aguera’s choice of words invokes the old cognitive science / computer science argument about “strong AI” versus “weak AI”, a distinction first posed by John Searle during the 1980s heyday of artificial intelligence research [Searle 1980: Minds, Brains and Programs (pdf)]. However, Searle’s formulation was a philosophical one, intended to tease out the difference between an artificially intelligent system that simulates a mind and one that actually has a mind. Searle’s interest had nothing to do with how impressive the algorithms were or how much computational power was required to produce AI. Instead, he was focused on whether a computational system could ever achieve consciousness and true understanding, and he believed the concept of strong AI was fundamentally misguided.

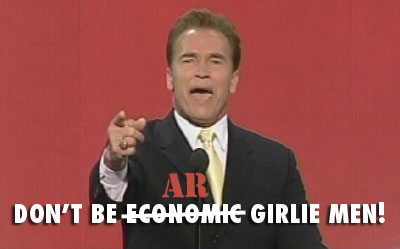

In contrast, Aguera’s framing is fueled by technical machismo. He uses strong and weak in the common schoolyard sense, and calls out “so-called augmented reality” that is “vague”, “crude”, and “sucks” in comparison to AR driven by gremlins (presumably shorthand for sophisticated machine vision algorithms backed by terabytes of image data and banks of servers in the cloud). “Strong AR is on the way”, he says, with the unspoken promise that it will rescue us from the weak AR we’ve had to endure until now.

OK, I get it. Smart technology people are competitive, they have egos, and they like to toss out some red meat now and then to keep the corporate execs salivating and the funding rolling in. Been there, done that, understand completely. And honestly, I love to see good technical work happen, as it clearly is happening in Blaise’s group (skip to 17:20 in the video to hear the entire ARE crowd gasp at his demo).

But here’s where I think this kind of thinking goes off the rails. The most impressive technical solution does not equate to the best user experience; locative precision does not equal emotional resonance; smoothly blended desktop flythroughs are not the same as a compelling human experience. I don’t care if your system has centimeter-level camera pose estimation or a 20-meter uncertainty zone; if you’re doing AR from a technology-centered agenda instead of a human-centered motivation, you’re doing it wrong.

Bruce Sterling said it well at ARE2010: “You are the world’s first pure play experience designers.” We are creating experiences for people in the real world, in their real lives, in a time when reality itself is sprouting a new, digital dimension, and we really should try to get it right. That’s a huge opportunity and a humbling responsibility, and personally I’d love to see the creative energies of every person in our industry focused on enabling great human experiences, rather than posturing about who has stronger algorithms and more significant digits. And if you really want to have an argument, let’s make it about “human AR” vs. “machine AR”. I think Searle might like that.