In the 2003 short paper “Creating and Experiencing Ubimedia”, members of my research group sketched a new conceptual model for interconnected media experiences in a ubiquitous computing environment. At the time, we observed that media was evolving from single content objects in a single format (e.g., a movie or a book) to collections of related content objects across several formats. This was exemplified by media properties like Pokemon and Star Wars, which manifested as coherent fictional universes of character and story across TV, movies, books, games, physical action figures, clothing and toys, and by American Idol, which harnessed large-scale participatory engagement across TV, phones/text, live concerts and the web. Along the same lines, social scientist Mimi Ito wrote about her study of Japanese media mix culture in “Technologies of the Childhood Imagination: Yugioh, Media Mixes, and Otaku” in 2004, and Henry Jenkins published his notable Convergence Culture in 2006. We know this phenomenon today as cross-media, transmedia, or any of dozens of related terms.

Coming from a ubicomp perspective, our view was that the implicit semantic linkages between media objects would also become explicit connections, through digital and physical hyperlinking. Any single media object would become a connected facet of a larger interlinked media structure that spanned the physical and digital worlds. Further, the creation and experience of these ubimedia structures would take place in the context of a ubiquitous computing technology platform combining fixed, mobile, embedded and cloud computing with a wide range of physical sensing and actuating technologies. This is the sense in which I use the term ubiquitous media: hypermedia that is made for, and experienced on, a ubicomp platform in the blended physical/digital world.

Of course the definitions of ubicomp and transmedia are already quite fuzzy, and the boundaries are constantly expanding as more research and creative development occur. A few examples of ubiquitous media might help demonstrate the range of possibilities:

An interesting commercial application is the Nike+ running system, jointly developed by Nike and Apple. A small wireless pressure sensor installed in a running shoe sends footfall data to the runner’s iPod, which also plays music selected for the workout. The data from the run is later uploaded to an online service for analysis and display. The online service includes social components, game mechanics, and the ability to mash up running data with maps. Nike-sponsored professional athletes endorse Nike-branded music playlists on Apple’s iTunes store. A recent feature extends Nike+ connectivity to specially designed exercise machines in selected gyms. Nike+ is a simple but elegant example of embodied ubicomp-based media that integrates sensing, networking, mobility, embedded computing, cloud services, and digital representations of people, places and things. Nike+ creates new kinds of experiences for runners, and gives Nike new ways to extend its value proposition, expand its brand footprint, and build customer loyalty. Nike+ has been around since 2006, but with the recent buzz about personal sensing and quantified selves it is receiving renewed attention, including a solid article in the latest Wired.

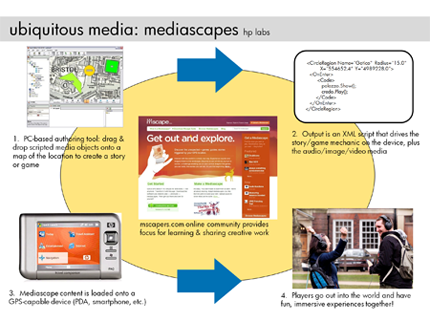

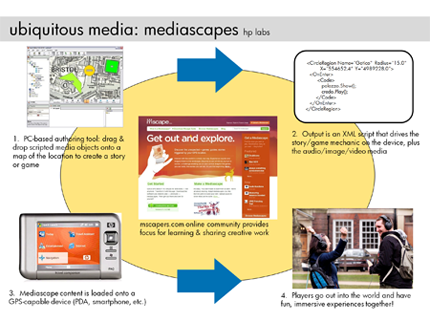

A good pre-commercial example is HP Labs’ mscape system for creating and playing a media type called mediascapes. These are interactive experiences that overlay audio, visual and embodied media interactions onto a physical landscape. Elements of the experience are triggered by player actions and sensor readings, especially location-based sensing via GPS. In the current generation, mscape includes authoring tools for creating mediascapes on a standard PC, player software for running the pieces on mobile devices, and a community website for sharing user-created mediascapes. Hundreds of artists and authors are actively using mscape, creating a wide variety of experiences including treasure hunts, biofeedback games, walking tours of cities, historical sites and national parks, educational tools, and artistic pieces. Mscape enables individuals and teams to produce sophisticated, expressive media experiences, and its open innovation model gives HP access to a vibrant and engaged creative community beyond the walls of the laboratory.
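To make the idea of location-triggered media a little more concrete, here is a minimal Python sketch of how a player might fire a media clip when someone walks into a region of the landscape. This is not mscape's actual authoring format or API; the `Region` and `Player` names, the circular regions, and the clip identifiers are all assumptions made purely for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Region:
    """A hypothetical circular trigger zone on the landscape."""
    name: str
    lat: float
    lon: float
    radius_m: float
    media: str  # hypothetical identifier of the clip to play on entry


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class Player:
    """Tracks which regions the player is inside and fires on-entry triggers."""

    def __init__(self, regions):
        self.regions = regions
        self.inside = set()  # names of regions the player currently occupies

    def update(self, lat, lon):
        """Feed in a new GPS fix; return the media for regions just entered."""
        triggered = []
        for region in self.regions:
            is_in = haversine_m(lat, lon, region.lat, region.lon) <= region.radius_m
            if is_in and region.name not in self.inside:
                self.inside.add(region.name)
                triggered.append(region.media)
            elif not is_in:
                # Leaving a region re-arms its trigger for the next visit.
                self.inside.discard(region.name)
        return triggered
```

Each GPS fix is passed to `update`, which returns a clip only on the transition from outside to inside a region, so standing still does not replay the same audio over and over. A real system would also have to cope with GPS jitter at region boundaries and with triggers from other sensors and player actions.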

These two examples demonstrate an essential point about ubiquitous media: in a ubicomp world, anything – a shoe, a city, your own body – can become a touchpoint for engaging people with media. The potential for new experiences is quite literally everywhere. At the same time, the production of ubiquitous media pushes us out of our comfort zones – asking us to embrace new technologies, new collaborators, new ways of engaging with our customers and our publics, new business ecologies, and new skill sets. It seems there’s a lot to do, so let’s get to it.